The AI Evolution: Google Unveils Gemini 3

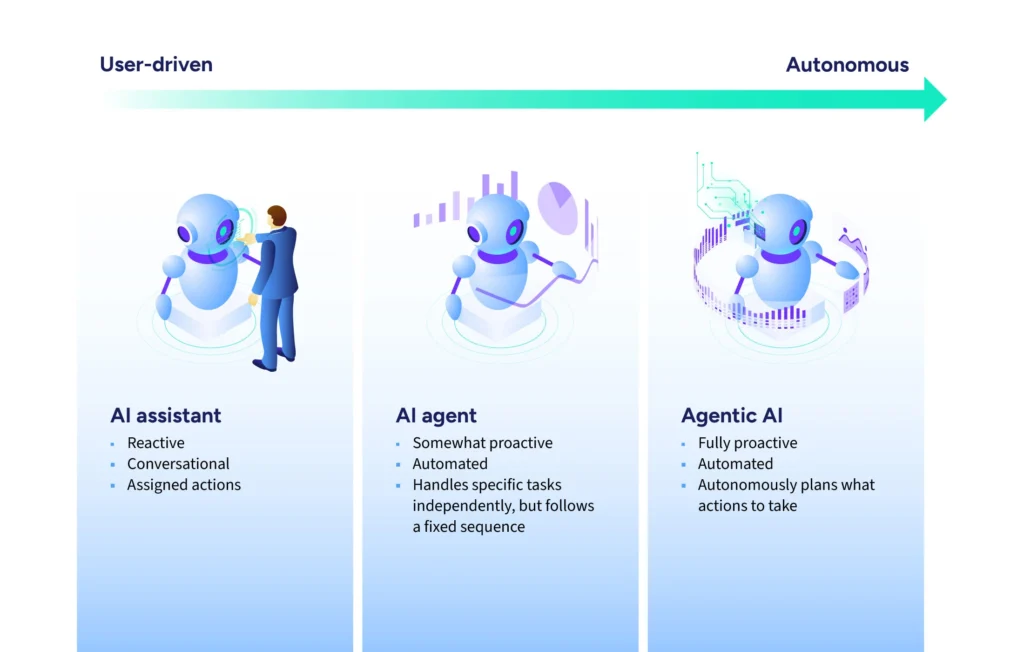

Google has taken a significant step in the race toward Artificial General Intelligence (AGI) with the rollout of Gemini 3, its most advanced and capable model to date. Described as a “state-of-the-art” system by CEO Sundar Pichai, Gemini 3 is presented as a major step forward in reasoning, multimodal understanding, and agentic coding.

This new model is not merely an incremental upgrade; it represents a foundational shift in how AI can process and synthesize information. With stronger complex problem-solving and more nuanced contextual understanding, Gemini 3 is poised to change everything from enterprise workflows to everyday interactions in the Gemini app and Google Search.

State-of-the-Art Reasoning: PhD-Level Intelligence

A primary focus for Gemini 3 is dramatically improved reasoning. The model has surpassed previous results on key benchmarks, demonstrating greater analytical depth than earlier large language models. This isn’t just about faster answers; it’s about deeper, more reliable, and more nuanced insights.

Benchmark Triumphs: Gemini 3 has topped the LM Arena leaderboard and demonstrated PhD-level reasoning on challenging academic tests such as GPQA Diamond and Humanity’s Last Exam.

Complex Problem-Solving: The model can reliably solve multi-layered problems across diverse subjects, including advanced science and mathematics.

🌐 Multimodal Mastery: Understanding the World

One of the most powerful features of Gemini 3 is its multimodal understanding. Built from the ground up to synthesize information across different types of data, Gemini 3 can process and connect text, images, video, and code all at once.

This capability unlocks transformative real-world applications:

Visual-Spatial Analysis: It can interpret visual and spatial information, for example analyzing a complex graph, or deciphering handwritten recipes in a photo and translating them into a digital cookbook.

Video Comprehension: The model can analyze lengthy video lectures or complex factory floor footage, generating structured notes, interactive learning materials, or step-by-step explanations.

Advanced Context: With an industry-leading 1-million-token context window, Gemini 3 can ingest and reason over massive amounts of information, such as an entire codebase or a three-hour multilingual meeting, and it outperforms previous generations on long-context tasks.
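To give a feel for what a 1-million-token window means in practice, here is a minimal Python sketch that estimates whether a set of documents would fit. It is not Gemini's tokenizer: the ratio of four characters per token is a rough rule of thumb assumed for illustration, and the function names and the output reserve are hypothetical. Real token counts vary by language and content.

```python
from typing import Iterable, Tuple

CONTEXT_WINDOW_TOKENS = 1_000_000  # window size quoted in the article
CHARS_PER_TOKEN = 4                # assumption: rough heuristic, not Gemini's tokenizer


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Approximate the token count of `text`, rounding up."""
    # Ceiling division without importing math.
    return -(-len(text) // chars_per_token)


def fits_in_context(
    documents: Iterable[str],
    window: int = CONTEXT_WINDOW_TOKENS,
    reserve_for_output: int = 8_192,  # hypothetical budget kept free for the reply
) -> Tuple[bool, int]:
    """Return (fits, estimated_total_tokens) for the given documents."""
    total = sum(estimate_tokens(doc) for doc in documents)
    return total <= window - reserve_for_output, total


if __name__ == "__main__":
    # Stand-in for a small codebase: 200 files of about 10,000 characters each.
    codebase = ["x" * 10_000 for _ in range(200)]
    fits, total = fits_in_context(codebase)
    print(f"estimated tokens: {total}, fits: {fits}")
```

By this estimate, roughly four million characters of text would fill the window, so a production check should rely on the provider's own token-counting facility rather than a character heuristic.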

💻 Agentic Coding and Dynamic Interfaces

For developers and enterprises, Gemini 3 is Google’s most powerful agentic coding model yet. It moves beyond simple code generation to become a true workflow assistant.

Google has introduced Generative Interfaces and Dynamic View, which allow Gemini 3 to design and code custom user interfaces in real time from a simple prompt. This enables rapid prototyping of full front-end designs with polished visuals and sophisticated components.

Furthermore, the model’s enhanced agentic capabilities enable it to execute complex, multi-step tasks autonomously. For instance, it can plan and execute entire software development workflows, acting like an intelligent ‘junior developer’ under user supervision.

Gemini 3 is now rolling out across Google’s ecosystem, including the Gemini app, AI Mode in Search, and developer platforms such as AI Studio and Vertex AI. The rollout signals a major step toward making intelligent, reasoning AI accessible to everyone.